Why use Serverless?

- Focus on your code, not infrastructure: Deploy your applications without worrying about server management, scaling, or maintenance.

- GPU-powered computing: Access powerful GPUs for AI inference, training, and other compute-intensive tasks.

- Automatic scaling: Your application scales automatically based on demand, from zero to hundreds of workers.

- Cost efficiency: Pay only for what you use, with per-second billing and no costs when idle.

- Fast deployment: Get your code running in the cloud in minutes with minimal configuration.

Key concepts

Endpoints

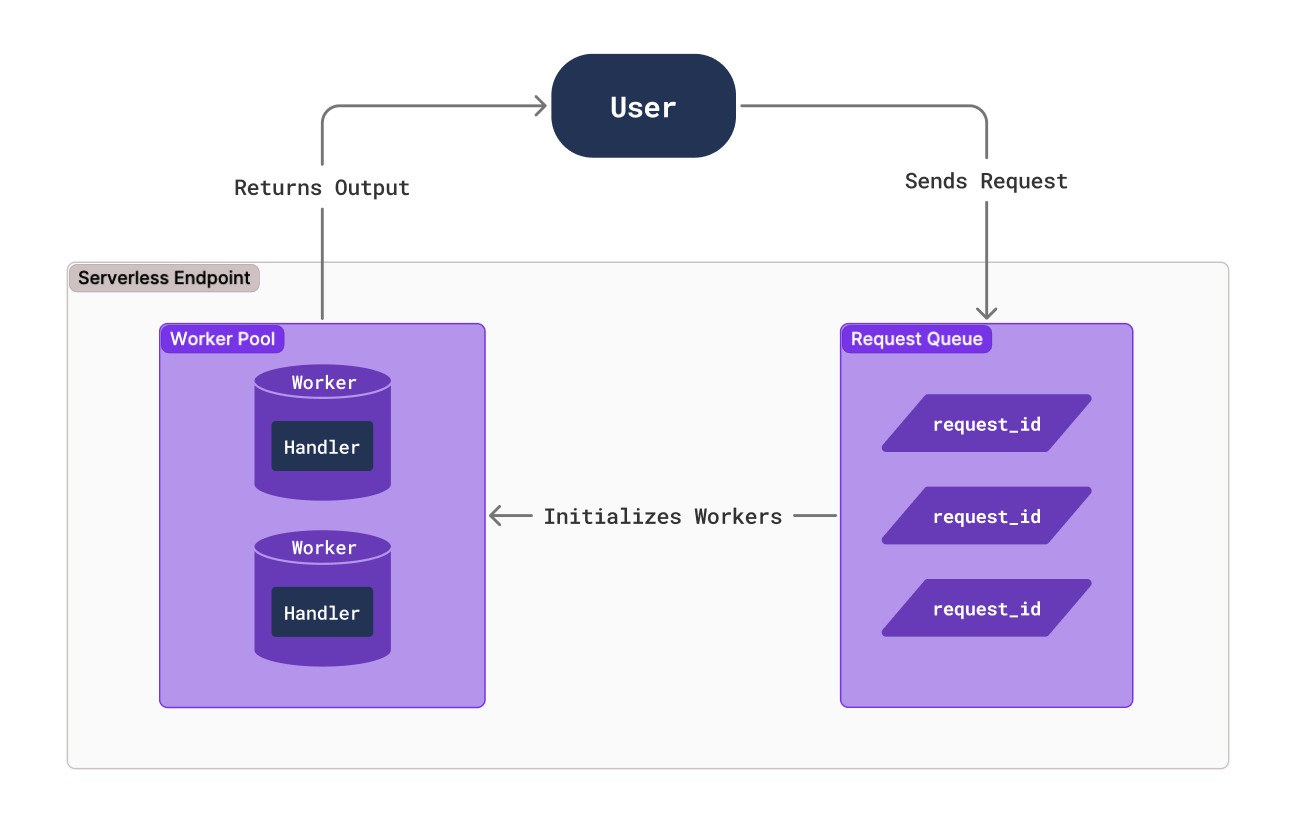

An endpoint is the access point for your Serverless application. It provides a URL where users or applications can send requests to run your code. Each endpoint can be configured with different compute resources, scaling settings, and other parameters to suit your specific needs.

Workers

Workers are the container instances that execute your code when requests arrive at your endpoint. Runpod automatically manages worker lifecycle, starting them when needed and stopping them when idle to optimize resource usage.

Handler functions

Handler functions are the core of your Serverless application. These functions define how a worker processes incoming requests and returns results. They follow a simple pattern: the function receives the request's input, processes it, and returns a result.

Deployment options

Runpod Serverless offers several ways to deploy your workloads, each designed for different use cases.

Runpod Hub

Best for: Instantly deploying preconfigured AI models.

You can deploy a Serverless endpoint from a repo in the Runpod Hub in seconds:

- Navigate to the Hub page in the Runpod console.

- Browse the collection and select a repo that matches your needs.

- Review the repo details, including hardware requirements and available configuration options, to ensure compatibility with your use case.

- Click the Deploy button in the top-right of the repo page. You can also use the dropdown menu to deploy an older version.

- Click Create Endpoint.

Deploy a vLLM worker

Best for: Deploying and serving large language models (LLMs) with minimal configuration.

vLLM workers are specifically optimized for running LLMs:

- Support for any Hugging Face model.

- Optimized for LLM inference.

- Simple configuration via environment variables.

- High-performance serving with vLLM.
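To illustrate configuration via environment variables, here is a minimal Python sketch of how a worker process might read its settings at startup. The variable names `MODEL_NAME` and `MAX_MODEL_LEN`, the example model ID, and the `WorkerConfig` class are assumptions for illustration, not a confirmed list of what the vLLM worker reads; check the worker's own documentation for the actual names.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class WorkerConfig:
    """Hypothetical settings a worker could read at startup."""
    model_name: str
    max_model_len: Optional[int]


def load_config(env: Mapping[str, str] = os.environ) -> WorkerConfig:
    # MODEL_NAME and MAX_MODEL_LEN are assumed names, used only for illustration.
    model_name = env.get("MODEL_NAME", "").strip()
    if not model_name:
        raise ValueError("MODEL_NAME must be set to a Hugging Face model ID")

    # Optional numeric setting: leave it as None when the variable is unset.
    raw_len = env.get("MAX_MODEL_LEN", "").strip()
    max_model_len = int(raw_len) if raw_len else None
    return WorkerConfig(model_name=model_name, max_model_len=max_model_len)


# Example with an explicit mapping instead of the real process environment.
config = load_config({"MODEL_NAME": "facebook/opt-125m", "MAX_MODEL_LEN": "2048"})
print(config)
```

Passing the environment as a mapping keeps the loader easy to test without touching the real process environment.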

Fork a worker template

Best for: Creating a custom worker using an existing template.

Runpod maintains a collection of worker templates on GitHub that you can use as a starting point:

- worker-basic: A minimal template with essential functionality.

- worker-template: A more comprehensive template with additional features.

- Model-specific templates: Specialized templates for common AI tasks (image generation, audio processing, etc.).

After forking a template, you can:

- Test your worker locally.

- Customize it with your own handler function.

- Deploy it to an endpoint using Docker or GitHub.
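One lightweight way to test a worker locally is to call its handler directly with a sample job before building any container. The sketch below assumes the handler receives a dictionary with an `input` key. The `echo_handler` and `run_local_test` names are hypothetical helpers, not part of any template, and the status strings are just labels for this sketch.

```python
import json
from typing import Any, Callable, Dict


def echo_handler(job: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical stand-in for the handler defined in a forked template."""
    name = job["input"].get("name", "World")
    return {"greeting": f"Hello, {name}!"}


def run_local_test(
    handler: Callable[[Dict[str, Any]], Any],
    test_input: Dict[str, Any],
) -> Dict[str, Any]:
    """Wrap sample input in a job-shaped dict, call the handler, report the outcome."""
    job = {"id": "local-test", "input": test_input}
    try:
        output = handler(job)
        # The result has to be JSON-serializable to travel back to a client.
        json.dumps(output)
    except Exception as exc:  # surface handler bugs instead of crashing the test run
        return {"status": "FAILED", "error": f"{type(exc).__name__}: {exc}"}
    return {"status": "COMPLETED", "output": output}


result = run_local_test(echo_handler, {"name": "Runpod"})
print(result)
```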

Build a custom worker

Best for: Running custom code or specialized AI workloads.

Creating a custom worker gives you complete control over your application:

- Write your own Python code.

- Package it in a Docker container.

- Get full flexibility for any use case.

- Create custom processing logic.
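As a rough illustration of the first step, a handler written in Python could look like the sketch below. It assumes the request payload arrives under an `input` key and that returning a dictionary with an `error` key signals a failed request. The `prompt` field and the uppercase transformation are placeholders for your own logic. The commented lines show how the function would typically be registered with the Runpod Python SDK.

```python
from typing import Any, Dict


def handler(job: Dict[str, Any]) -> Dict[str, Any]:
    """Process one request and return a JSON-serializable result."""
    job_input = job.get("input") or {}

    # Validate the input before doing any expensive work.
    prompt = job_input.get("prompt")
    if not isinstance(prompt, str) or not prompt.strip():
        return {"error": "input.prompt must be a non-empty string"}

    # Placeholder for custom processing logic (model inference, etc.).
    return {"result": prompt.strip().upper()}


# In a deployed worker, the handler is registered with the SDK, e.g.:
#   import runpod
#   runpod.serverless.start({"handler": handler})

print(handler({"id": "example", "input": {"prompt": "hello"}}))
```

Keeping the handler a plain function makes it easy to call directly in tests, independent of how it is packaged in a Docker container.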

How requests work

When a user/client sends a request to your Serverless endpoint:

- If no workers are active, Runpod automatically starts one (cold start).

- The request is queued until a worker is available.

- A worker processes the request using your handler function.

- The result is returned to the user/client after they call /status (see Job operations).

- Workers remain active for a period to handle additional requests.

- Idle workers eventually shut down if no new requests arrive.
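From the client's side, the flow above can be sketched as: submit a job, then poll /status until the job finishes. This is a minimal sketch, not a reference client. The base URL, the `/run` and `/status/{job_id}` paths, the bearer-token header, and the status strings are assumptions to verify against the Job operations reference, and all function names are hypothetical.

```python
import json
import time
import urllib.request
from typing import Any, Dict, Optional

BASE_URL = "https://api.runpod.ai/v2"  # assumed base URL
TERMINAL_STATUSES = {"COMPLETED", "FAILED", "CANCELLED", "TIMED_OUT"}  # assumed values


def run_url(endpoint_id: str) -> str:
    return f"{BASE_URL}/{endpoint_id}/run"


def status_url(endpoint_id: str, job_id: str) -> str:
    return f"{BASE_URL}/{endpoint_id}/status/{job_id}"


def _request_json(url: str, api_key: str, payload: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Send a POST when a payload is given, otherwise a GET, and decode the JSON reply."""
    data = json.dumps(payload).encode("utf-8") if payload is not None else None
    request = urllib.request.Request(
        url,
        data=data,
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST" if payload is not None else "GET",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


def submit_job(endpoint_id: str, api_key: str, job_input: Dict[str, Any]) -> str:
    """Queue a request; the job waits until a worker is available."""
    reply = _request_json(run_url(endpoint_id), api_key, {"input": job_input})
    return reply["id"]


def wait_for_result(
    endpoint_id: str,
    api_key: str,
    job_id: str,
    poll_seconds: float = 2.0,
    timeout_seconds: float = 300.0,
) -> Dict[str, Any]:
    """Poll the status URL until the job reaches a terminal status or the timeout expires."""
    deadline = time.monotonic() + timeout_seconds
    while time.monotonic() < deadline:
        status = _request_json(status_url(endpoint_id, job_id), api_key)
        if status.get("status") in TERMINAL_STATUSES:
            return status
        time.sleep(poll_seconds)
    raise TimeoutError(f"job {job_id} did not finish within {timeout_seconds} seconds")
```

A caller would first run `submit_job(endpoint_id, api_key, {"prompt": "hello"})` and then pass the returned ID to `wait_for_result`. The first request after an idle period can take longer because it includes the cold start.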

Common use cases

- AI inference: Deploy machine learning models that respond to user queries.

- Batch processing: Process large datasets in parallel.

- API backends: Create scalable APIs for computationally intensive operations.

- Media processing: Handle video transcoding, image generation, or audio processing.

- Scientific computing: Run simulations, data analysis, or other specialized workloads.